Oloris

Scent-borne light for a shared sensorium. R&D, Pitch Studios + Gentle Systems

Across centuries of technological progress, scent has remained the least represented sense. Photography gave structure to vision, recording transformed sound, and haptics allowed touch to enter the digital world. Smell stayed outside that frame—too chemical, too bound to emotion, too difficult to measure. Attempts to capture it, from nineteenth-century scent organs to modern electronic noses, have struggled to create convincing results. Translating smell always involves translating feeling, a process without fixed measure.

Smell shapes emotion and memory with immediacy. It activates recognition long before language intervenes. Descriptions rarely match experience; we borrow from touch or taste—soft, sharp, sweet—without capturing the molecular reality.

Oloris looks at how scent can be sensed, interpreted, and expressed visually in a structured way. The work focuses on turning chemical signals from the air into data that can drive shifts in colour, movement, and texture, offering a practical method for showing how a scent behaves without treating the output as a direct reproduction.

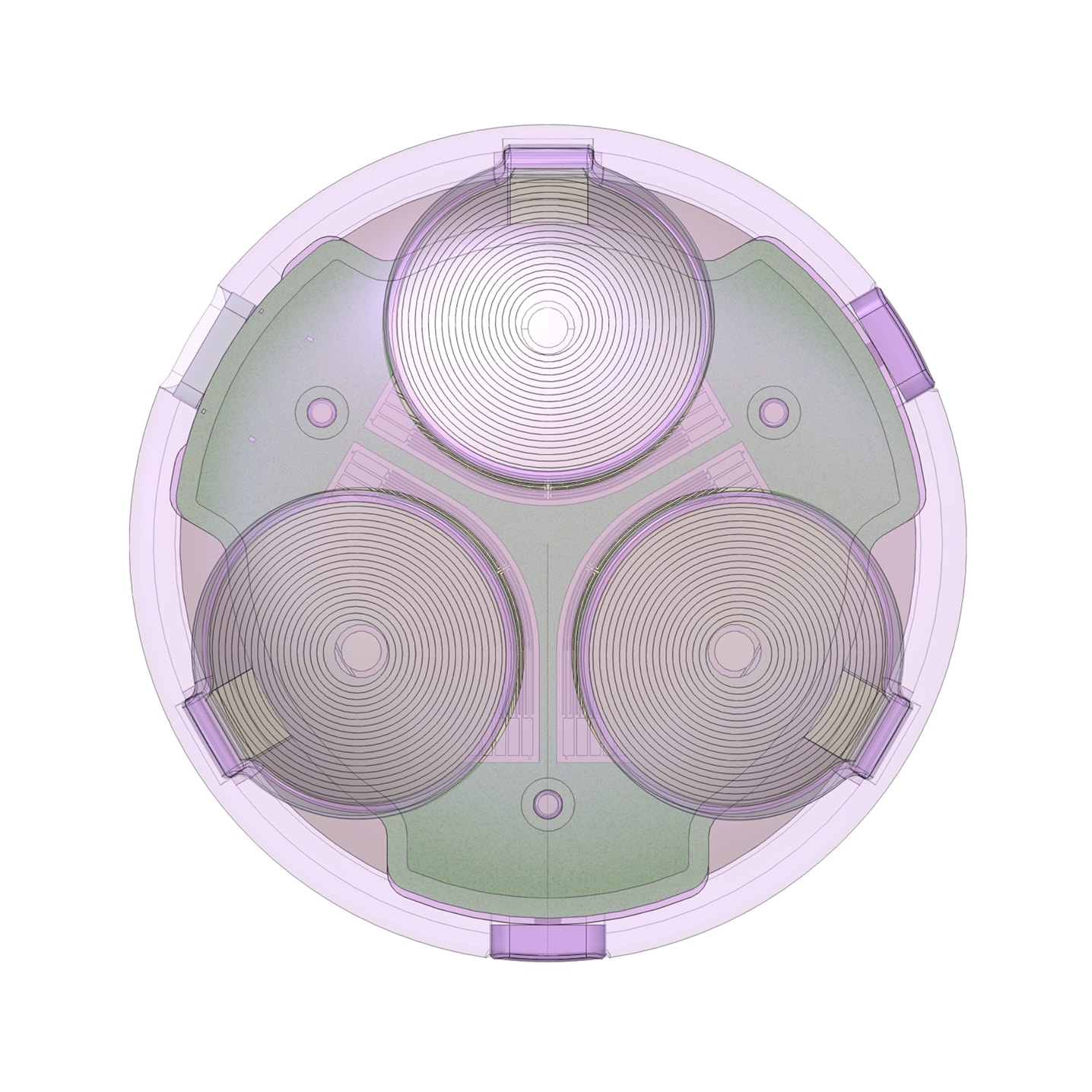

At its centre sits a carbon-based olfactory component built around a microarray of nanotubes. These nanoscale structures change their electrical resistance when exposed to volatile compounds. A trained machine-learning model interprets these variations to classify scent profiles, which then feed a visual generation system translating that data into movement, colour, and texture—a live representation of the surrounding air.
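The sensing and classification step can be sketched in a few lines. This is a minimal illustration, not the project's model: it assumes each channel is summarised as a relative resistance change against a clean-air baseline, and it swaps the trained machine-learning model for a simple nearest-centroid lookup. The channel count, resistance values, and scent labels are all hypothetical.

```python
from math import sqrt

def relative_response(baseline, reading):
    """Return the per-channel relative resistance change (R - R0) / R0.

    Assumes every baseline value is non-zero.
    """
    if len(baseline) != len(reading):
        raise ValueError("baseline and reading must have the same number of channels")
    return [(r - r0) / r0 for r0, r in zip(baseline, reading)]

def classify(response, centroids):
    """Return the label whose reference pattern is closest to the response.

    Nearest-centroid matching is a stand-in for the trained model.
    """
    def distance(a, b):
        return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return min(centroids, key=lambda label: distance(response, centroids[label]))

# Hypothetical 4-channel example (resistances in ohms).
baseline = [1000.0, 1200.0, 900.0, 1100.0]
reading = [1100.0, 1212.0, 990.0, 1100.0]

# Hypothetical reference patterns, one per scent label.
centroids = {
    "citrus": [0.10, 0.01, 0.10, 0.00],
    "smoke": [0.00, 0.20, 0.02, 0.15],
}

response = relative_response(baseline, reading)
label = classify(response, centroids)
```

Normalising against the baseline keeps channels with different resting resistances comparable before any pattern matching happens.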

Each detected scent generates a data signature linking chemical identity with emotional tone. The system maps that signature to visual behaviour—continuous shifts in light, motion, and density—offering a direct way to perceive what the air contains. The output carries both emotional and analytical qualities: expressive in colour and rhythm yet governed by precise rules connecting each parameter to measurable data.

The visuals update continuously as the sensor reads new data, showing how scent composition changes over time instead of capturing a single moment.
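One plausible way to keep a continuously updating visual from flickering is to smooth each incoming value before it reaches the renderer. The sketch below uses an exponential moving average; the source does not say which filter, if any, the prototype uses, so the function name and the `alpha` parameter are assumptions.

```python
def smooth_stream(samples, alpha=0.3):
    """Yield an exponentially smoothed value for each incoming sample.

    alpha near 1 follows the sensor closely; alpha near 0 reacts slowly.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = None
    for sample in samples:
        if smoothed is None:
            smoothed = sample  # the first sample seeds the filter
        else:
            smoothed = alpha * sample + (1.0 - alpha) * smoothed
        yield smoothed

# A sudden jump in the signal is eased in over several updates.
eased = list(smooth_stream([0.0, 1.0, 1.0], alpha=0.5))
```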

A chain of interpretations.

The system operates through successive conversions that create a digital form of synaesthesia, linking smell and sight. The combination of scent and visual response strengthens memory, enabling experiences to be recorded, revisited, and shared. Once digitised, the visuals become practical references for scent—records that can be archived, combined, or communicated.

1. Sensing: The nanotube field reads changes in air composition.
2. Interpretation: The machine-learning model translates this data into a perceptual scent profile.
3. Translation: The visual engine transforms the profile into motion, colour, and light.
4. Perception: The user reads the output, forming an intuitive sense of the scent without needing to smell it directly.
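The four steps above can be read as a chain of functions, each consuming the previous stage's output. The sketch below shows only that structure. The type aliases, the `run_chain` helper, and the stub stages are hypothetical placeholders and do not reflect how the prototype computes anything.

```python
from typing import Callable, Dict, List

Reading = List[float]       # one resistance value per sensor channel
Profile = Dict[str, float]  # perceptual features, each roughly in [0, 1]
Frame = Dict[str, float]    # visual parameters handed to a renderer

def run_chain(
    sense: Callable[[], Reading],
    interpret: Callable[[Reading], Profile],
    translate: Callable[[Profile], Frame],
) -> Frame:
    """Run sensing, interpretation, and translation once.

    The fourth step, perception, happens in the viewer and is not modelled.
    """
    return translate(interpret(sense()))

# Stub stages for illustration only.
def fake_sense() -> Reading:
    return [1010.0, 1195.0, 905.0]

def fake_interpret(reading: Reading) -> Profile:
    # Placeholder rule: treat the spread across channels as "concentration".
    spread = max(reading) - min(reading)
    return {"concentration": min(spread / 1000.0, 1.0)}

def fake_translate(profile: Profile) -> Frame:
    return {"vibrancy": profile["concentration"]}

frame = run_chain(fake_sense, fake_interpret, fake_translate)
```

Passing the stages in as callables means a recorded reading or a different model can be substituted without touching the rest of the chain.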

This interactive prototype lets you get a feel for how different scents are represented visually. It works as both a technical proof and a design study, illustrating how olfactory sensing could integrate into future connected hardware.

A visual vocabulary for scent.

Complexity

Complexity indicates how many different compounds make up the scent profile and how layered the signal appears. This directly affects distortion and animation behaviour in the visualiser, with higher complexity introducing more distortion and a more active, variable animation.

Concentration

Concentration reflects how many scent molecules are present in the air at that moment and how dense the VOC signal is at the sensor. Visually, it controls chromatic aberration and vibrancy, with higher concentration producing stronger colour separation and a more intense overall palette.

Volatility

Volatility describes how quickly the detected compounds evaporate or disperse, influencing how fast a scent moves or fades. In the visual output, volatility drives the frost effect: higher values soften and diffuse the edges, mirroring rapid dispersion in the air.

Brightness

Brightness indicates how clear or defined a scent signal appears relative to background noise, often linked to how open or sharp the underlying molecules behave. In the visualiser, brightness controls bloom strength: higher values create a stronger halo around the form, giving the output a more open and defined appearance.

When scent features shift into visual form, each parameter shapes a specific behaviour in the image. Concentration increases chromatic aberration and vibrancy, giving the output stronger colour separation as the signal becomes denser. Volatility introduces a frosted look that softens edges as faster-moving compounds disperse. Complexity adds distortion and makes the animation more active, reflecting how layered the chemical profile appears. Brightness adjusts bloom strength, giving clearer signals a more luminous presence. Together, these behaviours create a consistent visual language that reflects how the sensor reads the air.
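The parameter-to-effect rules described here can be written down as a small mapping. This sketch assumes every scent feature is already normalised to the range 0 to 1 and that each effect follows its feature linearly. The real response curves are not given in the source, and the class and field names are illustrative.

```python
from dataclasses import dataclass

def clamp01(value: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, value))

@dataclass(frozen=True)
class ScentFeatures:
    complexity: float
    concentration: float
    volatility: float
    brightness: float

@dataclass(frozen=True)
class VisualParams:
    distortion: float
    animation_activity: float
    chromatic_aberration: float
    vibrancy: float
    frost: float
    bloom: float

def to_visual(features: ScentFeatures) -> VisualParams:
    """Map each scent feature to the visual behaviours it drives."""
    return VisualParams(
        # Complexity drives distortion and how active the animation is.
        distortion=clamp01(features.complexity),
        animation_activity=clamp01(features.complexity),
        # Concentration drives colour separation and palette intensity.
        chromatic_aberration=clamp01(features.concentration),
        vibrancy=clamp01(features.concentration),
        # Volatility drives the frost effect that softens edges.
        frost=clamp01(features.volatility),
        # Brightness drives bloom strength.
        bloom=clamp01(features.brightness),
    )

# Out-of-range inputs are clamped rather than rejected.
visual = to_visual(ScentFeatures(0.8, 0.5, 1.4, -0.2))
```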

When we treat scent as information, it becomes something perceptible, recordable, and expressive. Beyond helping us build a better understanding of a sense we often neglect, this technology can add value to different contexts and industries.

Environmental awareness — keeping track of air quality and factors that influence the air we breathe.

Personal wellbeing — interpreting breath and skin compounds as shifting colour states.

Nutrition and cuisine — identifying food and providing information on its provenance.

Art and spatial design — enabling installations that react to collective scent presence.

Memory and storytelling — embedding scent signatures into photographs or recordings.

Circularity and sustainability — helping automated systems sort materials with strong smells.

A new form factor for a new technology.

Development began with existing electronic-nose research, which served as a reference for scale and performance. Existing electronic-nose developer kits detect a limited range of gases through nanotube arrays that register resistance shifts. From this foundation, the team modelled how a future version could interpret more nuanced scent compositions through advanced training and sensor refinement.

Read more on Notion — Technical Landscaping and Insights

Oloris combines software and hardware exploration to validate its sensing principle within a manufacturable, portable form factor. Early software work focused on experimenting with a resistance-based gas-sensing developer kit to study how signal variation could encode different scent signatures. These tests examined sensor sensitivity, signal stability, and pattern repeatability. Rather than building a full data pipeline, the priority was to observe and characterize these responses to guide the next steps: shaping both the visual representation system and the physical architecture of the device.

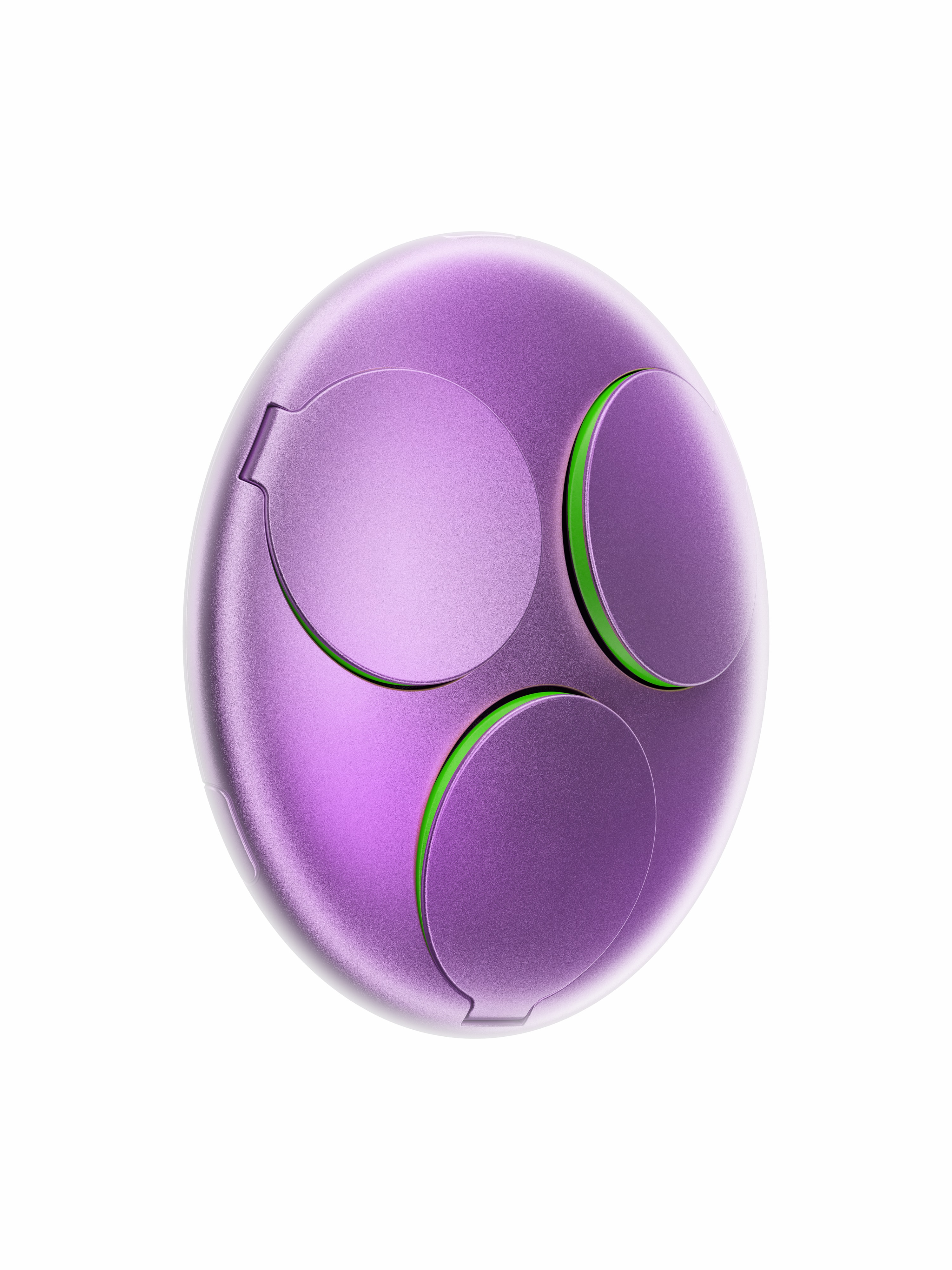

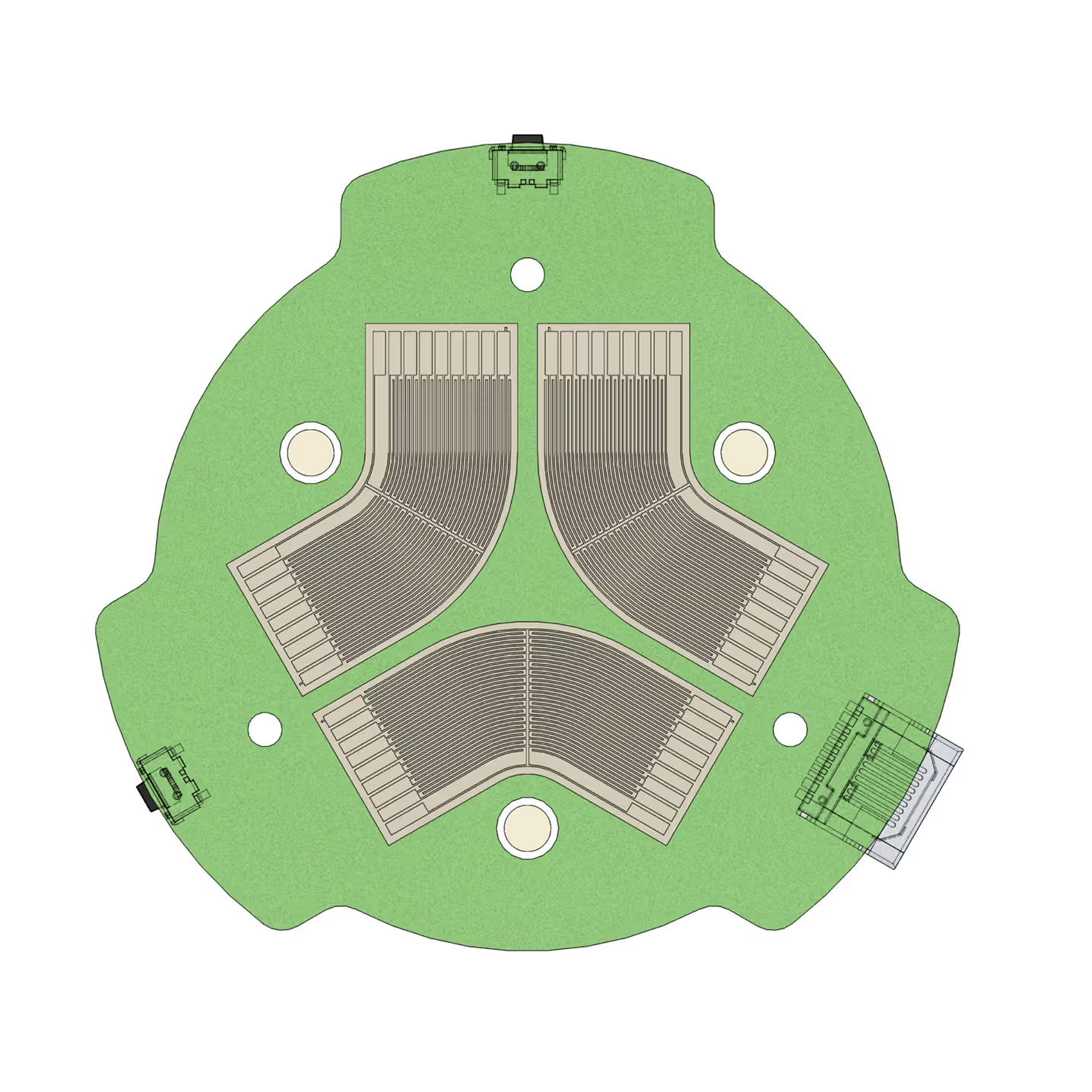

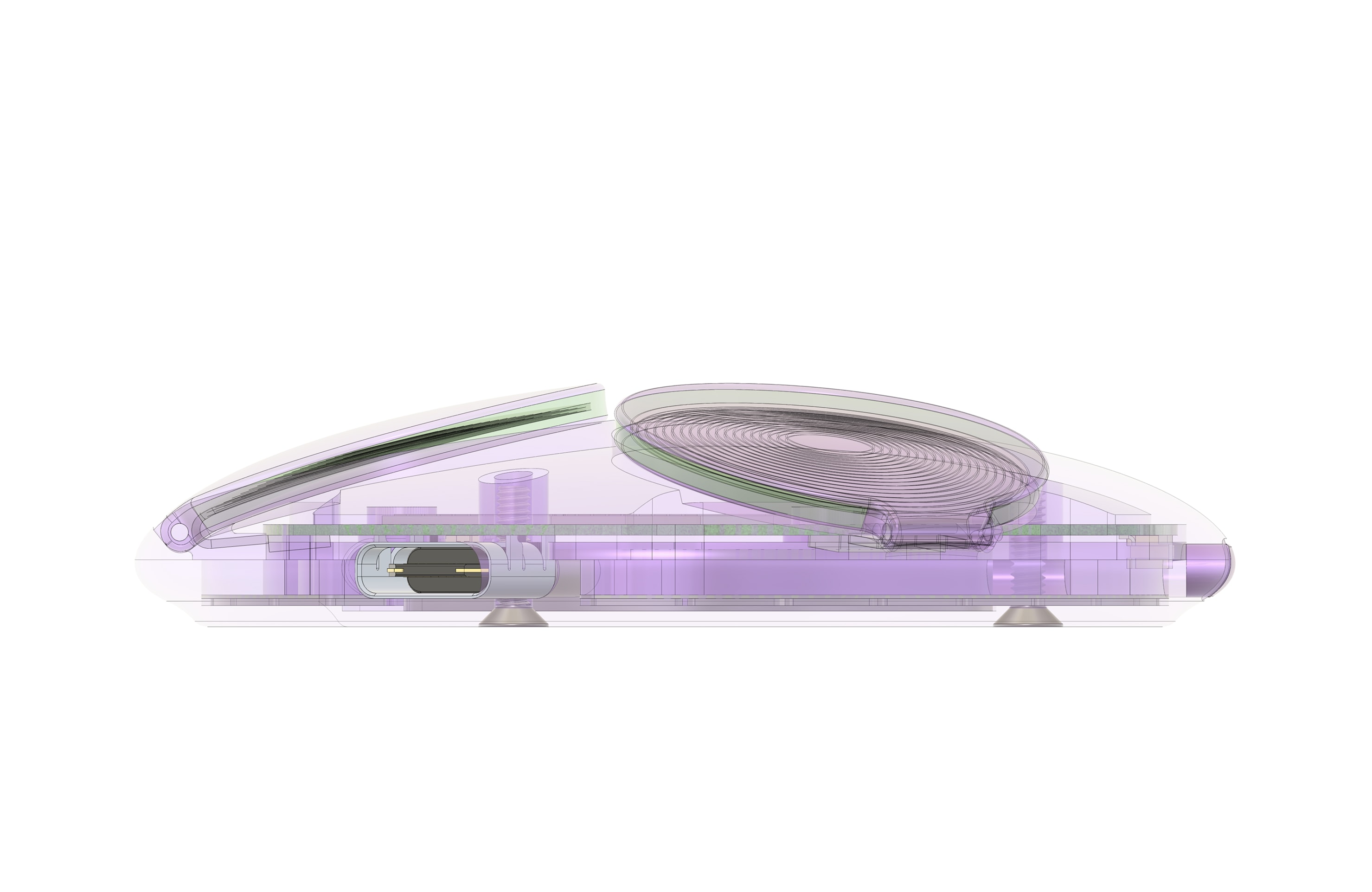

Building on these insights, the design engineering effort translated the sensing behavior into an integrated prototype. The geometry of the original sensor array was reworked to accommodate three separate arrays within the enclosure. This configuration introduced redundancy, improving reliability and signal consistency across sensing cycles. Power efficiency also remained a key requirement, guiding the selection of a microcontroller optimized for responsiveness and low energy consumption, enabling Oloris to operate continuously for weeks on a single battery.
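To illustrate how three redundant arrays can improve consistency, the sketch below fuses them by taking the median of each channel, so a single misreading array cannot skew the result. The source does not state how the prototype combines its arrays, so median fusion is an assumption. The example uses four channels instead of 16 to stay readable, and the values are made up.

```python
from statistics import median

def fuse_arrays(arrays):
    """Combine redundant sensor arrays into one reading per channel.

    Takes the per-channel median, which ignores a single outlying array.
    """
    if not arrays:
        raise ValueError("at least one array is required")
    channel_count = len(arrays[0])
    if any(len(array) != channel_count for array in arrays):
        raise ValueError("all arrays must have the same number of channels")
    return [median(channel) for channel in zip(*arrays)]

# Hypothetical readings: array_b has a faulty third channel (9.0).
array_a = [1.0, 2.0, 3.0, 4.0]
array_b = [1.1, 2.0, 9.0, 4.0]
array_c = [0.9, 2.1, 3.1, 4.2]

fused = fuse_arrays([array_a, array_b, array_c])
```

With three arrays, the median of each channel is always a value that at least one array actually reported, which makes the fused reading easy to trace back.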

Low-power operation lets a small coin-cell battery run the device for weeks.

Three arrays of 16-channel carbon nanotubes ensure nuanced readings.

Behind the scenes.

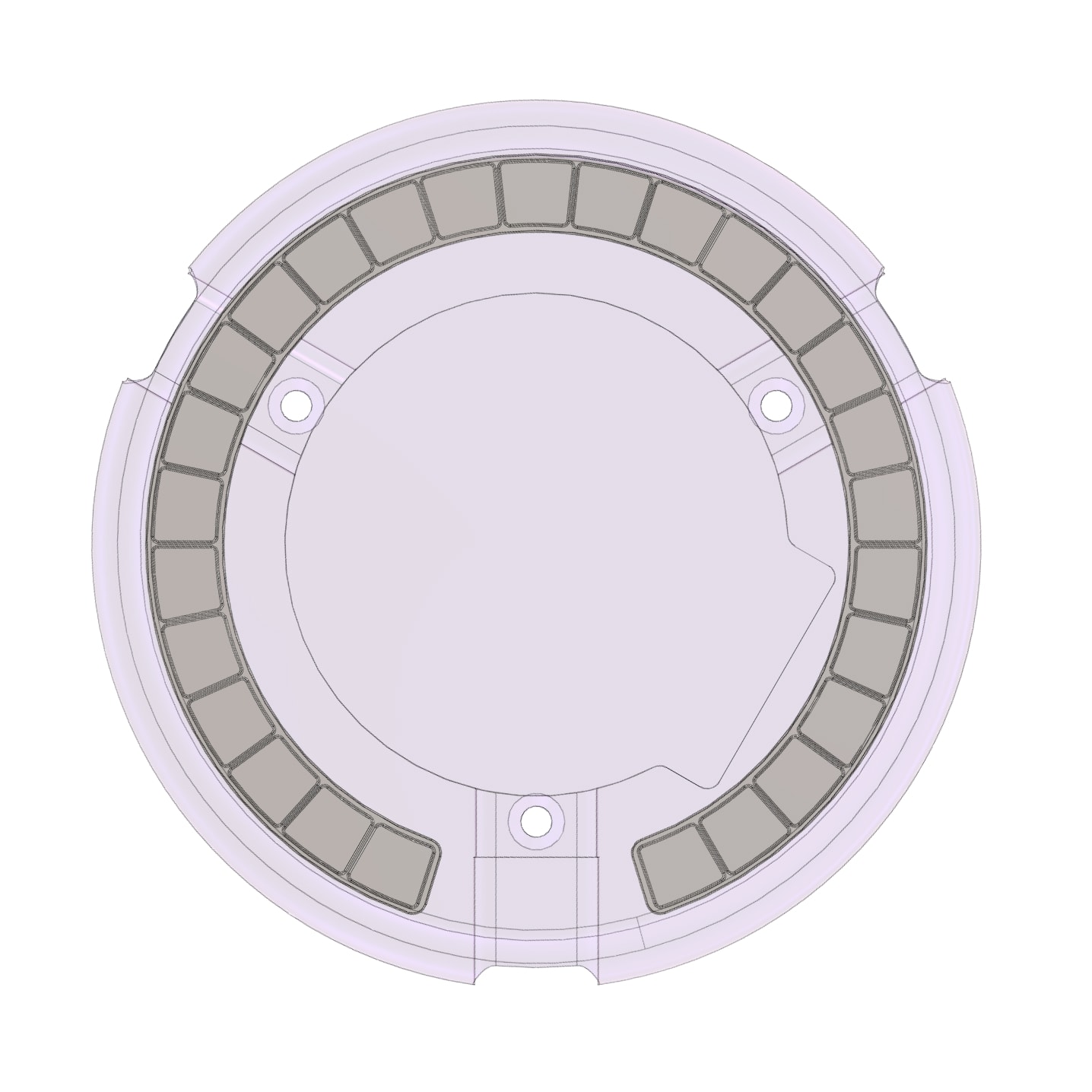

A magnetic coupling system lets the device attach to phones, wearables, or fixed surfaces, so it can serve as a portable or domestic sensor.

The enclosure was designed around airflow and protection: three hinged vents expose the sensors when open and shield them from dust and moisture when closed. To avoid conventional actuators, the team developed a coil-activated bi-stable hinge that can open or close without constant power, reflecting the device’s rhythm of active and resting states. A magnetic coupling ring allows the prototype to attach seamlessly to different surfaces (a phone, a wall, or a wearable), positioning Oloris as a versatile, modular sensing unit rather than a fixed object.
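The energy argument for a bi-stable hinge can be shown with a toy state model: the coil is energised only when the vent changes state, and holding either position costs nothing. The `BistableVent` class and its pulse counter are hypothetical and stand in for whatever firmware drives the real coil.

```python
class BistableVent:
    """Toy model of a vent that needs a coil pulse only to change state."""

    def __init__(self, is_open: bool = False) -> None:
        self.is_open = is_open
        self.pulses = 0  # number of times the coil has been energised

    def set_open(self, want_open: bool) -> bool:
        """Move to the requested state; return True if a pulse was needed."""
        if want_open == self.is_open:
            return False  # already there, so no energy is spent
        self.pulses += 1  # stand-in for a brief coil pulse
        self.is_open = want_open
        return True

vent = BistableVent()
opened = vent.set_open(True)    # pulse: closed to open
repeated = vent.set_open(True)  # no pulse: already open
closed = vent.set_open(False)   # pulse: open to closed
```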

We developed a coil-activated hinge mechanism that opens and closes the vents without a conventional actuator. Three hinged vents let the device take readings when prompted.

A bi-stable hinge design allows for non-powered open and closed states. A trade-off could be made to enable a breathing animation.

Reflection

Oloris stands between material research and perceptual design, developed from the wish to give technology a finer sensory range. The collaboration joined scientific investigation with creative reasoning, showing how computation can interact with sensory phenomena such as scent and atmosphere.

Working together gave each discipline a distinct view of the same challenge—how to create something that senses rather than performs. The exchange between design, engineering, and research shaped the outcome more than any single tool or method. It revealed perception as a design material and showed that even air can hold structured intelligence.

Scent has always existed as something temporary. Oloris captures that movement, transforming what usually disappears into something visible, shareable, and durable.

Process

The process grew through constant dialogue between disciplines. Each team brought its own expertise and language—design, engineering, computation—while ideas moved easily across them. We worked through sketches, research, and prototypes as parallel threads feeding a single system. What tied everything together was a shared way of thinking: curious, iterative, and deeply collaborative.

Team

Pitch Studios

Creative research, concept development, narrative framing, writing, interface logic, visual direction and digital design.

Team: Christie Morgan & Alexa Chirnoagă

Gentle Systems

Technical research, concept development, industrial design and engineering — shaping the device, mechanical systems, and prototyping with digital nose technologies.

Photography, visualization and post-production — device images, use case scenes, supporting mood scenes.

Team: Lucas Teixeira, Aurélien Lourot, Andrea Marsanasco

Additional Contributors

Adrien Chuttarsing — algorithmic logic and machine learning model connecting chemical input with perceptual output, front-end interface development.

Arvind Sushil — device renders