Talking to Tools

R&D, LLMs x Automation, 2025

LLMs have made prompting part of our lives, from organizing our groceries to helping us write this very article. But so far they have limited influence in the world of things.

To explore how AI can help us coordinate complex actions in the real world, we built a mini movie set starring a not-so-scary dinosaur, and a pretty menacing chicken, replete with camera-wielding robotic arm that let us genuinely talk to our tools.

"Get a close-up of its teeth – and make it look gigantic."

You set out to film a subject, a toy dinosaur, let’s say. Instead of fiddling with complicated camera setups, what if you could just tell an intelligent system what you want, like a conversation between a director and a cameraman?

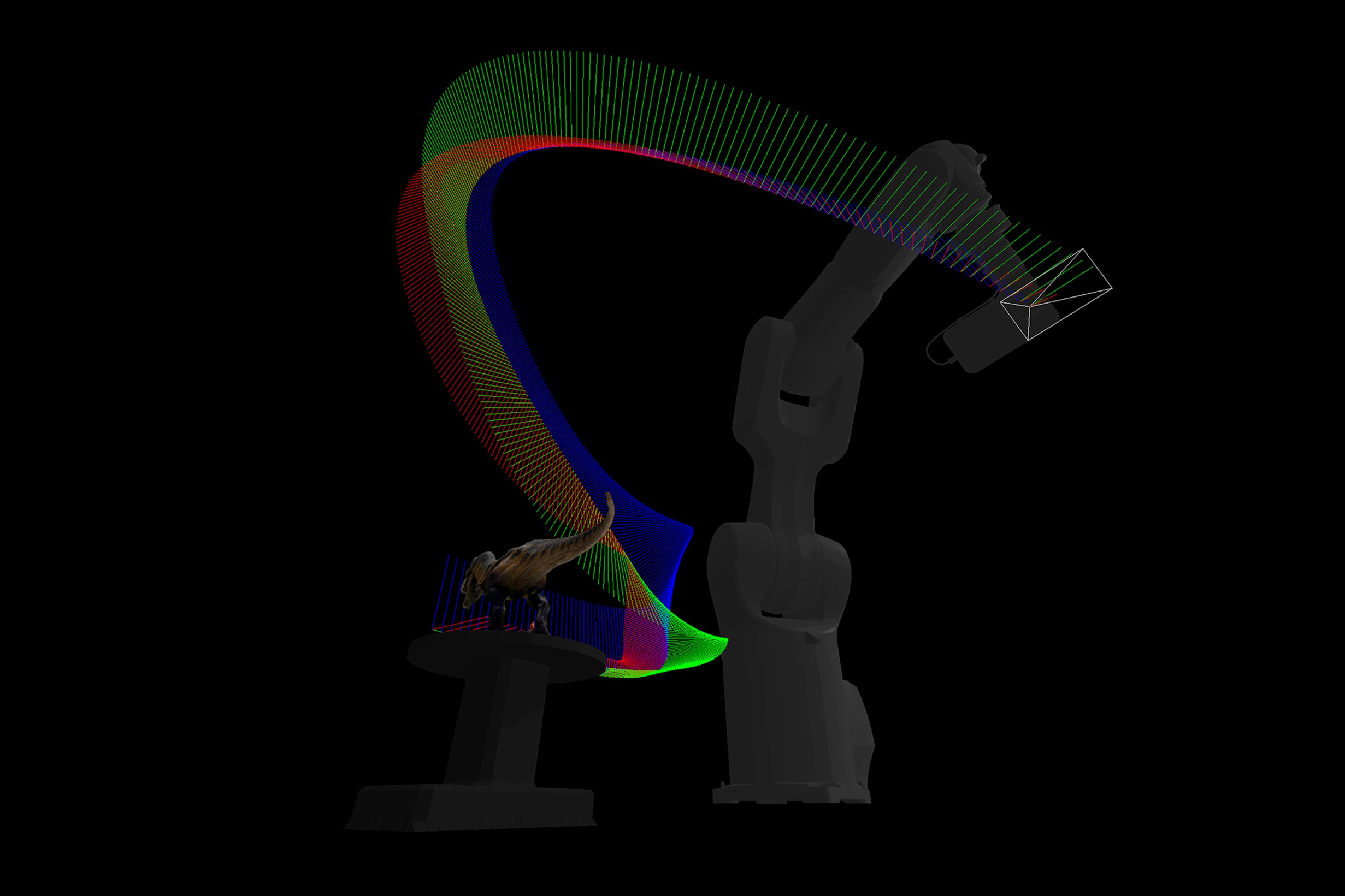

Illustration of a system flow: from prompting to recording.

Perception

For a system to effectively assist us in any task — be it filming, using home appliances, or industrial machines — it must genuinely understand the subject and environment, as well as have some understanding of itself. Through our investigation it became clear to us that to coordinate agentic AI actions in the real-world we need to run a ‘curiosity protocol’ surveying the environment, the subjects and understanding the object.

In this experiment, that means knowing that it is a robot, holding an iPhone that acts as a camera, acting as a Director of Photography, and shooting scenes or images of a toy dinosaur and chicken.

The system needs some level of abstraction to recognize one of our subjects as a dinosaur, that at the same time is a toy (which informs understanding of its scale and physical properties), and then meticulously break it down into its constituent parts: a head, legs, a tail, and tiny plastic teeth.

The system also needs to grasp nuance—that some lighting might make it look like a lizard while other setups emphasize its dinosaur features, or that its teeth could be perceived as a friendly smile or a menacing snarl. This deep, contextual understanding of the subject and its components is the crucial first step, forming the foundation upon which all subsequent actions and interactions are built. Ultimately, it has to distinguish it properly from its closest-living relative: the common chicken.

Curiosity Protocol

Surveying the environment brings its own set of challenges. How to develop a 3D understanding out of several 2D images? Are there two dinos or only one which moved between two images? As a robotic agent, how to be sure I'm not about to hit an object I haven't seen yet? How do I know I have discovered everything?

As a first step, let's assume the environment is static and contained in a known box, so that the robot can easily circle around it without risking collisions. The goal of the robotic agent at this stage is to answer three questions:

1. Which objects am I seeing?

To identify objects (a chicken, the head of a dino, etc.) on pictures, the agent can either use its own vision capability or make use of other AI models, e.g. Detection Transformers.

2. Where is each object in space?

If the camera held by the robotic agent provides depth information, the camera position, orientation and field of view, as well as the 2D object detection bounding box out of the Detection Transformer model can be combined with the depth of each pixel of the image to compute a 3D surface of the object, which can be used as a good approximation of the object position in space. If no depth information is available, a photogrammetry approach can be used to determine the position of each object in space.

3. How big is each object?

By combining either the various 3D surfaces of each object computed earlier, or again a photogrammetry approach, the agent can determine an approximate bounding volume of each object. The agent's knowledge of the world can also be involved in this step, e.g. a t-rex's tail is expected to have an elongated bounding box, whereas its head is more likely to fit in a cube or a sphere.

Simulation and Agency

Once the system has a rich understanding of the subject, we can build a simulated environment and make that knowledge accessible to our LLM. This will be the foundation for our prompting interface, where people can make a wish, and have that language translated into courses of action that combine tools and functions (of course subject to interpretation, failure, and iteration, as it also happens when we work with humans).

The second core component of this simulation is to bring in our tools this digital twin space. If the goal is to orient the dinosaur toy or a tool interacting with it, the system needs a model of its own "body"—whether that body is a precise robotic arm, a tripod-mounted sensor, or instructions for a human operator.

By integrating PyBullet in our simulation environment we can "rehearse" the robot moves before we play them out in the real-world. For a robotic arm holding the camera, this means tackling the complex dance of inverse kinematics – figuring out how all its joints need to turn to achieve a smooth, cinematic crane shot or a subtle dolly-in, all while keeping the dinosaur perfectly in focus and framed. If a planned camera path would result in the equipment bumping into the set or casting an unwanted shadow, the simulation catches it. The AI learns and revises its shot list, often in an iterative dialogue if connected to a user.

Positioning System

A cornerstone of how our experiments enables an LLM to plan effectively, especially for spatial tasks, is an unexpected Positioning System approach that combines spherical and Cartesian coordinates, instead of classic XYZ positioning.

This system is designed to bridge the gap between human-like descriptions of movement and the precise spatial data that simulations and robotic effectors require. It primarily leverages spherical coordinates for defining relative actions, with null or reference points often rooted in the center of the subject or its identifiable parts. For example, an LLM can plan an action like "orientate the camera 20 degrees to the right and 30 degrees up" relative to the dinosaur toy's head.

This is vastly more intuitive and resource-efficient for a language model compared to a scenario where it would need to directly calculate the new absolute Cartesian coordinates for such a relative adjustment. The latter would involve complex trigonometry—like Pythagoras, with squares and square roots—a type of computation that is cumbersome and less natural for an LLM's typical processing style.

The influential concepts of "System 1" and "System 2" thinking were prominently brought into mainstream understanding by Nobel laureate Daniel Kahneman. His work, particularly the book "Thinking, Fast and Slow," outlines this framework, which presents two main ways our minds process information and arrive at decisions: a fast, automatic, and intuitive mode known as System 1, contrasted with the slower, more deliberate, and analytical mode referred to as System 2.

0:00

0:00

Aurélien explains System 1 and System 2 thinking.

By allowing the LLM to "speak" and reason in a language of relative angles and offsets that are closer to natural human expression, it can formulate plans more rapidly, effectively skipping unnecessary calculations and reasoning.

Automated Jib

Robotic Arm

Drone